This book made me think new thoughts; this is rare, so I am posting about it.

If you read nothing else in the post, read the end.

"A New Kind of Science" is a 13-year-old book preceded, and regrettably often prejudged, by its reputation. Many of the criticisms that have come to define the work are valid, so let's get that part out in the open. The book can be read in its entirety online; it is enormous, both physically (~1,200 pages) and in scope, which has led to a limited and specialized readership lodging many legitimate, though mostly technical, complaints. To make matters worse, Wolfram comes across as rather smug and boastful, taking for granted the revolutionary impact of his work, staking claims to originality that are often incorrect, and failing to adequately cite his intellectual predecessors in the main body of the text. These are legitimate concerns, and the omissions are egregious, to be sure.

However, the book itself was written to be accessible to anyone with basic knowledge of math and science, and I feel it gains so much from being simply and frankly written in this way. Furthermore, the biggest ideas in the book, even if not completely original or 100% convincing as-is, are still as beautiful and important as they are currently underappreciated. Even if he cannot claim unique ownership of them all, Wolfram has done a heroic job explicating these ideas for the lay reader, and his book has vastly increased their popular visibility. But I fear that many may be missing out on a thoroughly enjoyable, philosophically insightful book simply on the basis of some overreaching claims and some rather technical flaws; I get the sinking feeling it's going the way of Atlas Shrugged: a big book that's cool to dismiss without ever having read it.

I'm not going to get into the specific criticisms; suffice it to say that there are issues with the book, though Wolfram has gone to some trouble to defend his positions. But this notorious reception, coupled with the fact that the book weighs in at almost 6 pounds, had kept me and surely many others from ever giving it a proper chance. This post is not meant to be an apology, and neither is it intended to be a formal book review. Rather, I am going to show you several things I took away from my reading of it that I feel very grateful for, regardless of the extent to which they represent any sort of paradigm shift, or even anything new to human inquiry. Many of these ideas were very new to me, and they were presented so well that I feel I have ultimately gained a new perspective on many issues, including life itself, which I have been trying sedulously for years to better understand.

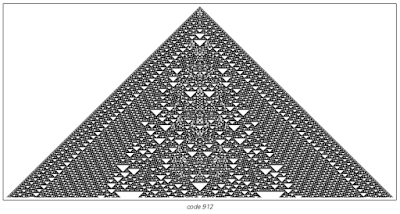

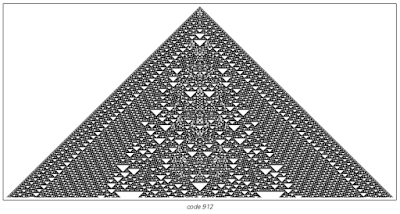

To attempt to write a general review of this book would be quite difficult, for its arguments depend so much upon pictures (of which there are more than 1,000!), careful explanations, and repeated examples to build up intuition for how very simple rules can produce complex behavior, and how this fact plausibly accounts for many phenomena in the natural and physical world (indeed, perhaps the universe itself). Wolfram uses this intuition to convey compelling explanations of space and time, experience and causation, thinking, randomness, free will, evolution, the Second Law of thermodynamics, incompleteness and inconsistency in axiom systems, and much more. I will mostly be talking about the non-math/physics material in this post, because most of the rest is beyond my ken.

*This will make a little more sense later on*

To begin with, the first 5 to 8 chapters are nothing if not eye-opening; they could and should be read by all high-schoolers who are interested in science, and unless you are a scientist yourself I guarantee you will gain many new insights into some fundamental issues.

Some of the physics (ch. 9) got a little heavy for me, but this may well be the most interesting part of the book for many. The final chapter (12) was what did it for me personally.

In this long last chapter, the main thesis of the book is driven home. It is as follows: the best (and indeed, perhaps the only) way to understand many systems in nature (and indeed, perhaps nature itself) is to think in terms of simple programs instead of mathematical equations; that is, to view processes in nature as performing rule-based computations. Simple computer programs can explain, or at least mimic, natural phenomena that have so far eluded mathematical models such as differential equations; Wolfram argues that nature is ultimately inexplicable by these traditional methods.

He demonstrates how very simple programs can produce complexity and randomness; he argues that because simple programs must be ubiquitous in the natural world, they are responsible for the complexity and randomness we observe in natural systems. He shows how idealized model programs like cellular automata can mimic in shockingly exact detail the behavior of phenomena which science has only tenuously been able to describe: crystal growth (e.g., snowflakes), fluid turbulence, the path of a fracture when materials break, biological development, plant structures, pigmentation patterns...thus indicating that such simple processes likely underlie much of what we observe.

Indeed, he makes a case (originally postulated by Konrad Zuse) that the universe itself is fundamentally rule-based, and essentially one big ongoing computation of which everything is a part. It gets a little hairy, but in chapter 9 Wolfram discusses how the concept of causal networks can be used to explain how space, time, elementary particles, motion, gravity, relativity, and quantum phenomena all arise. Indeed, he argues that causal networks can represent everything that can be observed, and that everything is defined in terms of their connections. This is predicated on the belief that there are no continuous values in nature; that is to say, that nature is fundamentally discrete. There were a lot of intriguing ideas here, but I cannot go into them all right now. There does seem to be a reasonable case to be made for some kind of digital physics. I am way out of my league here, though, so I'll stop. Check out the Wikipedia article on digital physics!

Cellular automata and other related easy-to-follow rule-based systems are used to demonstrate, or at least to hint at, most of these claims. If you haven't seen these before, check out that link: it takes you to Wolfram's own one-page summary of how these things work. In fact, I'm going to cut-and-paste most of it below. But here's a brief description: imagine of a row of cells that can be either black or white. You start with some initial combination of black and/or white cells in this row; to get the next row, you

apply a set of rules to the original cells which tells you what color cells in the next row should be based on the colors of the original cells above. The rules that determine the color of a new cell are based on the colors of three cells: the cell immediately above it and the cells to the immediate right and left of the one above it. Thus, the color of any given cell is affected only by itself and its immediate neighbors. Simple enough, but

those neighbors are in turn governed by

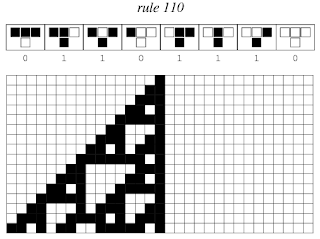

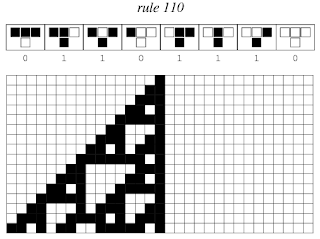

their neighbors, and those neighbors by their neighbors, etc, so that the whole thing ends up being highly interconnected. When you repeatedly apply a given rule and step back to observe the collective behavior of all the cells, large-scale patterns can emerge. You often get simple repetitive behavior (like the top picture below) or nested patterns (second picture below). However, sometimes you find behavior that is random, or some mixture of random noise with moving structures (last picture below).

Look at the picture just above; the left side shows certain regularities, but the right side exhibits random behavior (and has indeed been used for practical random number generators and encryption purposes). How might one predict the state of this system after it has evolved for a given number of time-steps? (This is an important "exercise left to the reader," so think about it before reading on.)

The 'take-home' here is that sometimes simple rules lead to behavior that is complex and random, and the lack of regularities in these systems defies any short description using mathematical formulas. The only way to know how that sucker right there is going to behave in 1,000,000,000 steps is to run it and find out.
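Here is roughly what "run it and find out" looks like in practice. This is a minimal Python sketch of my own, not code from the book, and the function names are made up for illustration. A rule is numbered 0 to 255 because each of the 8 possible three-cell neighborhoods (left, center, right) gets one bit of the rule number saying whether the new cell is black. I'm assuming the random-looking picture above is rule 30, Wolfram's standard example of randomness from a single black cell, since the picture isn't labeled here.

```python
def step(row: list[int], rule: int) -> list[int]:
    """Apply an elementary cellular automaton rule once.

    Bit k of `rule` (0-255) is the new color for the neighborhood whose
    left/center/right cells spell k in binary, e.g. (1, 0, 0) -> k = 4.
    Cells beyond the ends count as white (0) and the row grows by one cell
    on each side. That is only faithful for rules that keep an all-white
    neighborhood white, i.e. even rule numbers such as 30 and 110.
    """
    padded = [0, 0] + row + [0, 0]
    return [
        (rule >> (4 * padded[i - 1] + 2 * padded[i] + padded[i + 1])) & 1
        for i in range(1, len(padded) - 1)
    ]


def evolve(rule: int, steps: int) -> list[list[int]]:
    """Start from a single black cell and apply `rule` `steps` times."""
    rows = [[1]]
    for _ in range(steps):
        rows.append(step(rows[-1], rule))
    return rows


def render(rows: list[list[int]]) -> str:
    """Draw black cells as '#', centering each row under the widest one."""
    width = len(rows[-1])
    return "\n".join(
        "".join("#" if cell else " " for cell in row).center(width) for row in rows
    )


rows = evolve(30, 15)
print(render(rows))
# Row t has 2t + 1 cells, so the cell under the starting cell is at index t.
print([row[t] for t, row in enumerate(rows)])
```

Nothing in the sketch jumps ahead: as far as anyone knows, to learn what row 1,000,000,000 looks like you have to call `step` a billion times. The first six center-column values come out as 1, 1, 0, 1, 1, 1.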

If you like looking at pictures like this one, you should definitely check out the book. I read it digitally but I ordered a physical copy as soon as I finished because man, what a terrific coffee-table book this thing makes!

Computational universality

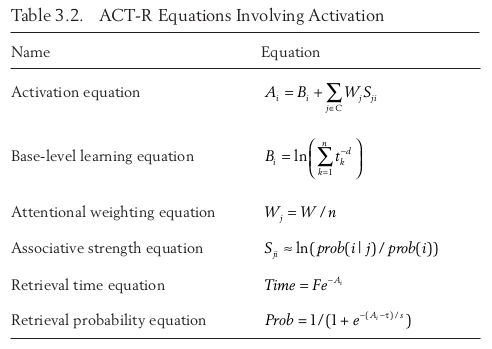

Now here's where things got really interesting for me. Unless you have studied computer science (which I honestly really haven't), you might be surprised to find out (as I honestly kind of was) that certain combinations of rules, like those shown above, can result in systems that are capable of performing any possible computation and emulating any other system or computer program. Indeed, the computer you are reading this on right now has this capability. Hell, your microwave probably has this capability; given enough memory, it could run any program or calculate any function, provided the function is computable at all.

This idea, called universal computation, was developed by Alan Turing in the 1930s: a system is said to be "universal" or "Turing complete" if it is able to perform any computation. If a system is universal, it must be able to emulate any other system, and it must be able to produce behavior that is as complex as that of any other system; knowing that a system is universal implies that the system can produce behavior that is arbitrarily complex.

When studying the 256 rule-sets that generate the elementary cellular automata, Wolfram and his assistant Matthew Cook showed that a couple of them (rule 110 and relatives) could be made to perform any computation; that is, a couple of these extremely simple systems, among the most basic conceivable types of programs, were shown to be universal.
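To get a feel for just how simple rule 110 is, it helps to unpack the number itself. Below is a minimal Python sketch (my own illustration with hypothetical helper names, not Wolfram's code): written in binary, 110 is 01101110, and those eight bits are the new colors for the neighborhoods 111, 110, 101, 100, 011, 010, 001 and 000, in that order. Running the rule from a single black cell draws the triangle that grows off to the left.

```python
def rule_table(rule: int) -> dict[tuple[int, int, int], int]:
    """Map each (left, center, right) neighborhood to its new color, 111 first."""
    return {
        (l, c, r): (rule >> (4 * l + 2 * c + r)) & 1
        for l in (1, 0)
        for c in (1, 0)
        for r in (1, 0)
    }


def run_from_single_cell(rule: int, steps: int) -> list[str]:
    """Evolve one black cell for `steps` steps and return the rows as text.

    Assumes an all-white neighborhood stays white (true for rule 110), and
    sizes the row so the pattern never reaches the edge within `steps`.
    """
    table = rule_table(rule)
    width = 2 * steps + 1
    row = [0] * width
    row[steps] = 1
    lines = []
    for _ in range(steps + 1):
        lines.append("".join("#" if cell else " " for cell in row))
        padded = [0] + row + [0]
        row = [table[tuple(padded[i - 1 : i + 2])] for i in range(1, width + 1)]
    return lines


print("".join(str(bit) for bit in rule_table(110).values()))  # 01101110
print("\n".join(run_from_single_cell(110, 16)))
```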

*Rule 110 from a single black cell (16 steps)*

In general, this itself is not new knowledge; von Neumann was the first to show that a cellular automaton could be a universal computer, and it was known that other simple devices could support universal computation. However, this was the simplest instantiation of universality yet discovered, and Wolfram uses it to argue that the phenomenon must be much more widespread than originally thought, and indeed very common in nature. While most basic sets of rules generate very simple behavior (like the first and second rules pictured above), past a certain threshold you get universality, where a system, like rule 110, can emulate any other system given the appropriate initial conditions.

How universality can actually be achieved with cellular automata in practice is described with great clarity in the book, but it would be too complicated to get into here. Pretty neat though! Wolfram goes on to show how universality is instantiated in Turing machines, cellular automata, register machines, and substitution systems, by showing how each one can be made to emulate the others by setting up appropriate initial conditions, despite great differences in their underlying structure.
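For readers who, like me, hadn't met a Turing machine before: it is just a head moving along a tape, reading a symbol, writing a symbol, moving left or right, and switching to a new internal state according to a fixed table. Here is a minimal Python sketch of one, assuming a toy machine of my own (a binary incrementer, which is certainly not universal) purely to show the mechanics; the transition table and function names are hypothetical illustrations, not taken from the book.

```python
from collections import defaultdict

# Hypothetical toy machine: add 1 to a binary number written on the tape.
# (state, symbol read) -> (symbol to write, head move, next state)
INCREMENT = {
    ("right", "0"): ("0", +1, "right"),  # scan right to the end of the number
    ("right", "1"): ("1", +1, "right"),
    ("right", "_"): ("_", -1, "carry"),  # hit the blank, step back onto the last digit
    ("carry", "1"): ("0", -1, "carry"),  # 1 + carry = 0, keep carrying left
    ("carry", "0"): ("1", 0, "halt"),    # 0 + carry = 1, done
    ("carry", "_"): ("1", 0, "halt"),    # ran off the left end: new leading 1
}


def run_turing_machine(rules, tape_text, state="right", blank="_", max_steps=10_000):
    """Run until the 'halt' state and return the non-blank stretch of tape."""
    tape = defaultdict(lambda: blank, enumerate(tape_text))
    head = 0
    steps = 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        write, move, state = rules[(state, tape[head])]
        tape[head] = write
        head += move
        steps += 1
    used = [pos for pos, symbol in tape.items() if symbol != blank]
    return "".join(tape[pos] for pos in range(min(used), max(used) + 1))


print(run_turing_machine(INCREMENT, "1011"))  # 1100
print(run_turing_machine(INCREMENT, "111"))   # 1000
```

A universal Turing machine works the same way; its table is just cleverer, so that part of the tape can hold a description of any other machine to emulate.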

"It implies that from a computational point of view a very wide variety of systems, with very different underlying structures, are at some level fundamentally equivalent...every single one of these systems is ultimately capable of exactly the same kinds of computations."

Any kind of system that is universal can perform the same computations; as soon as one gets past the threshold for universality, that's all. Things can't get more complex. It doesn't matter how complex the underlying rules are; one universal system is equivalent to any other, and adding more to its rules cannot have any fundamental effect. He goes on to say,

"...my general expectation is that more or less any system whose behavior is not somehow fundamentally repetitive or nested will in the end turn out to be universal."

This and related research led Wolfram to postulate his "new law of nature", the Principle of Computational Equivalence: that since universal computation means that one system can emulate any other system, all computing processes are equivalent in sophistication, and this universality is the upper limit on computational sophistication.

"No system could be constructed in our universe that is capable of more complex computations than any other universal system; no system can carry out computations that are more sophisticated than those carried out by a Turing machine or cellular automaton."

Another way of stating this is that there is a fundamental equivalence between many different kinds of processes, and that all processes which are not obviously simple can be viewed as computations of equivalent sophistication, whether they are man-made or spontaneously occurring. When we think of computations, we typically think of carrying out a series of rule-based steps to achieve a purpose, but computation is in fact much broader and, as Wolfram would argue, all-encompassing. As in cellular automata, the process of any system evolving is itself a computation, even if its only function is to generate the behavior of the system. Thus, all processes in nature can be thought of as computations; the only difference is that "the rules such processes follow are defined not by some computer program that we as humans construct but rather by the basic laws of nature."

Wolfram goes on to suggest that any instance of complex behavior we observe is produced by a universal system.

"I suspect that in almost any case where we have seen complex behavior... it will eventually be possible to show that there is universality. And indeed... I believe that in general there is a close connection between universality and the appearance of complex behavior."

"Essentially any piece of complex behavior that we see corresponds to a kind of lump of computation that is at some level equivalent."

He argues that this is why some things appear complex to us, while other things yield patterns or regularities that we can perceive, or which can be described by some formal mathematical analysis:

"If one studies systems in nature it is inevitable that both the evolution of the systems themselves and the methods of perception and analysis used to study them must be processes based on natural laws. But at least in the recent history of science it has normally been assumed that the evolution of typical systems in nature is somehow much less sophisticated a process than perception and analysis.

Yet what the Principle of Computational Equivalence now asserts is that this is not the case, and that once a rather low threshold has been reached, any real system must exhibit essentially the same level of computational sophistication. So this means that observers will tend to be computationally equivalent to the systems they observe— with the inevitable consequence that they will consider the behavior of such systems complex."

Thus, the reason things like turbulence in fluids or any other random-seeming phenomena appear complex to us is that we are computationally equivalent to these things. To really understand the implications of this idea, we need to bring in the closely related idea of irreducibility.

Computational irreducibility

Wolfram claims that the main concern of science has been to find ways of predicting natural phenomena, so as to have some control/understanding of them. Instead of having to specify at each step how, say, a planet orbits a star, it is far better to derive a mathematical formula or model that allows you to determine the outcome of such systems with a minimum of computational effort. Sometimes, you can even find definite underlying rules for such systems which make prediction just a matter of applying these rules.

However, there are many common systems for which no traditional mathematical formulas have been found that can easily describe their behavior. And even when you know the underlying rules, there is often no way to know for sure how the system will ultimately behave without performing an irreducible amount of computation. Imagine how you would try to predict the row of black and white cells after the rule-110 cellular automaton had run for, say, a trillion steps. As far as anyone knows, there is simply no way to do this besides carrying out the full computation; no way to reduce the amount of computational effort that this would require. Thus,
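To make the contrast concrete, it helps to look at a rule where a shortcut does exist. This is a minimal Python sketch of my own (the function names are hypothetical): rule 90, where each new cell is the XOR of the two cells diagonally above it, is a well-known reducible case. Starting from one black cell, its pattern is Pascal's triangle mod 2, so any cell at any step can be computed directly with a bit trick from Lucas's theorem instead of by simulation.

```python
def simulate_rule90(steps: int) -> list[int]:
    """Brute force: start from one black cell and apply rule 90 `steps` times."""
    width = 2 * steps + 1
    row = [0] * width
    row[steps] = 1  # the single black cell, in the middle
    for _ in range(steps):
        row = [
            (row[i - 1] if i > 0 else 0) ^ (row[i + 1] if i < width - 1 else 0)
            for i in range(width)
        ]
    return row


def rule90_cell(t: int, x: int) -> int:
    """Color of cell x (0 = starting cell) after t steps, with no simulation."""
    if abs(x) > t or (t + x) % 2:
        return 0
    k = (t + x) // 2
    # Lucas's theorem: C(t, k) is odd exactly when k and t - k share no 1 bits.
    return 1 if (k & (t - k)) == 0 else 0


print(simulate_rule90(4))                        # [1, 0, 0, 0, 0, 0, 0, 0, 1]
print([rule90_cell(4, x) for x in range(-4, 5)])  # [1, 0, 0, 0, 0, 0, 0, 0, 1]
print(rule90_cell(10**12, 0))                    # 0, a trillion steps in one line
```

Computing a cell this way takes a handful of bit operations whether t is 10 or a trillion. Nobody knows a trick like this for rule 30 or rule 110, which is consistent with Wolfram's claim that they are computationally irreducible.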

"Whenever computational irreducibility exists in a system it means that in effect there can be no way to predict how the system will behave except by going through almost as many steps of computation as the evolution of the system itself.

...what leads to the phenomenon of computational irreducibility is that there is in fact always a fundamental competition between systems used to make predictions and systems whose behavior one tries to predict.

For if meaningful general predictions are to be possible, it must at some level be the case that the system making the predictions be able to outrun the system it is trying to predict. But for this to happen the system making the predictions must be able to perform more sophisticated computations than the system it is trying to predict."

This is because the system you are trying to predict and the methods you are using to make predictions are computationally equivalent; thus for many systems there is no general way to shortcut their process of evolution, and their behavior is therefore computationally irreducible. Unfortunately, there are many common systems whose behavior cannot ultimately be determined at all except through direct simulation, and which thus don't appear to yield to any short mathematical description. Wolfram argues that almost any universal system is irreducible, because nothing can systematically outrun a universal system. He gives the following thought experiment:

"For consider trying to outrun the evolution of a universal system. Since such a system can emulate any system, it can in particular emulate any system that is trying to outrun it. And from this it follows that nothing can systematically outrun the universal system. For any system that could would in effect also have to be able to outrun itself."

Since universality should be relatively common in natural systems, so too should computational irreducibility be, making it impossible to predict the behavior of these systems except by simulating them. He argues that traditional science has always relied on computational reducibility, and that "its whole idea of using mathematical formulas to describe behavior makes sense only when the behavior is computationally reducible." This seems to impose stark limits on traditional scientific inquiry, for it implies that it is impossible to find theories that will perfectly describe a complex system's behavior without arbitrarily much computational effort.

Free Will and Determinism

This section of the book uses the idea of computational irreducibility to demystify the age-old problem of free will in a way I find quite satisfying, even beautiful. Humans, and indeed most other animals, seem to behave in ways that are free from obvious laws. We make minute-to-minute decisions about how to act that do not seem fundamentally predictable.

Wolfram argues that this is because our behavior is computationally irreducible; the only way to work out how such a system will behave, or to predict its behavior, is to perform the computation. This lets us have our materialist/mechanistic cake and eat it: we can admit that our behavior essentially follows a set of underlying rules with our autonomy intact, because our rules produce complexities that are irreducible and hence unpredictable.

We know that animals as living systems follow many basic underlying rules— genes are expressed, enzymes catalyze biochemical pathways, cells divide—but we have also seen how even very basic rule-sets result in universality, complexity, and computational irreducibility of the system.

"This, I believe, is the ultimate origin of the apparent freedom of human will. For even though all the components of our brains presumably follow definite laws, I strongly suspect that their overall behavior corresponds to an irreducible computation whose outcome can never in effect be found by reasonable laws."

The main criterion for freedom in a system seems to be that we cannot predict its behavior. If we could, then the behavior of the system would be predetermined. Wolfram muses,

"For as we have seen many times in this book even systems with quite simple and definite underlying rules can produce behavior so complex that it seems free of obvious rules. And the crucial point is that this happens just through the intrinsic evolution of the system—without the need for any additional input from outside or from any sort of explicit source of randomness.

And I believe that it is this kind of intrinsic process—that we now know occurs in a vast range of systems—that is primarily responsible for the apparent freedom in the operation of our brains.

But this is not to say that everything that goes on in our brains has an intrinsic origin. Indeed, as a practical matter what usually seems to happen is that we receive external input that leads to some train of thought which continues for a while, but then dies out until we get more input. And often the actual form of this train of thought is influenced by memory we have developed from inputs in the past—making it not necessarily repeatable even with exactly the same input.

But it seems likely that the individual steps in each train of thought follow quite definite underlying rules. And the crucial point is then that I suspect that the computation performed by applying these rules is often sophisticated enough to be computationally irreducible—with the result that it must intrinsically produce behavior that seems to us free of obvious laws."

Intelligence in the Universe

Wolfram has a wonderful section about intelligence in the universe, but this post is quickly becoming quite long, so I will stick to my highlights. Definitely check it out if what I say here interests you.

Here he poignantly discusses how "intelligence" and "life" are difficult to define, and how many features of commonly given definitions of intelligence (learning and memory, communication, adaptation to complex situations, handling abstraction) and life (spontaneous movement/response to stimuli, self-organization from disorganized material, reproduction) are in fact present in much simpler systems that we would not describe as intelligent or alive.

"And in fact I expect that in the end the only way we would unquestionably view a system as being an example of life is if we found that it shared many specific details with life on Earth."

Discussing extraterrestrial intelligence, he introduced me to an idea that is probably a well-known science fiction trope, but one that genuinely surprised me. He talks about how Earth is bombarded with radio signals from around our galaxy and beyond, but these signals seem to be completely random noise, and thus they are assumed to be just side effects of some physical process. But, he notes, this very lack of regularities in the signal could actually be a sign of some kind of extraterrestrial intelligence: "For any such regularity represents in a sense a redundancy or inefficiency that can be removed by the sender and receiver both using appropriate data compression." If this doesn't make sense to you, then you will probably also enjoy his section on data compression and reducibility. The whole book is really worth taking the time to read!
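Wolfram's point about compression is easy to check with an ordinary compressor. A minimal Python sketch, using the standard-library `zlib` module as a stand-in for "appropriate data compression" (my assumption, not Wolfram's example): a highly regular message shrinks to almost nothing, while random bytes cannot be shrunk at all. Once the regularity has been squeezed out, what is left has the statistical look of noise, which is his point about the radio signals.

```python
import random
import zlib


def compression_ratio(data: bytes) -> float:
    """Compressed size divided by original size (lower means more redundancy)."""
    return len(zlib.compress(data, 9)) / len(data)


regular = b"01" * 50_000  # 100,000 bytes with an obvious repeating pattern
rng = random.Random(0)    # fixed seed so the "noise" is reproducible
noise = bytes(rng.getrandbits(8) for _ in range(100_000))

print(f"regular: {compression_ratio(regular):.4f}")  # far below 1
print(f"noise:   {compression_ratio(noise):.4f}")    # about 1: nothing to remove
```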

An Incredible Ending

I'm going to quote the last few paragraphs of the book in full, because they are extremely beautiful to me and there is no way I could do them justice. If you read nothing else in this blog post, read this. Feeling the full intensity of its impact/import really depends on one having read the previous, like, 800 pages and having understood the main arguments in them, so if you are planning to read the book in its entirety you might save this part until then for greatest effect. Still, if this is the only thing you ever read by Stephen Wolfram, I think it should be this. It is a good stylistic representation of the book (the short sentences, the lucid writing, the hubris) and it is the ultimate statement of the work's conclusions. Fair warning: much of it is going to sound absolutely outrageous if you haven't read the book, and especially if you haven't read the parts of this post about universality and computational irreducibility. In fact, even still it sounds kind of preposterous!

But having been preoccupied with these questions about life for many years now, this passage resonated with me deeply and immediately and I am still reeling from it. Though I am not completely convinced (though are we ever, of anything?), the ideas summarized herein constitute, at least for me personally, a singularly compelling theory of existence, of nature, of life... of everything. Granted, I am taking a lot on faith for now, but I know I will have occasion to return to these thoughts time and time again as they percolate across my lifetime; indeed, it is largely for this reason that I took the time to write this post. Well, here it is; as elsewhere in the quoted material, any emphasis is mine:

*********************************************************************

"It would be most satisfying if science were to prove that we as humans are in some fundamental way special, and above everything else in the universe. But if one looks at the history of science many of its greatest advances have come precisely from identifying ways in which we are not special—for this is what allows science to make ever more general statements about the universe and the things in it.

Four centuries ago we learned for example that our planet does not lie at a special position in the universe. A century and a half ago we learned that there was nothing very special about the origin of our species. And over the past century we have learned that there is nothing special about our various physical, chemical and other constituents.

Yet in Western thought there is still a strong belief that there must be something fundamentally special about us. And nowadays the most common assumption is that it must have to do with the level of intelligence or complexity that we exhibit. But building on what I have discovered in this book, the Principle of Computational Equivalence now makes the fairly dramatic statement that even in these ways there is nothing fundamentally special about us.

For if one thinks in computational terms the issue is essentially whether we somehow show a specially high level of computational sophistication. Yet the Principle of Computational Equivalence asserts that almost any system whose behavior is not obviously simple will tend to be exactly equivalent in its computational sophistication.

So this means that there is in the end no difference between the level of computational sophistication that is achieved by humans and by all sorts of other systems in nature and elsewhere. For my discoveries imply that whether the underlying system is a human brain, a turbulent fluid, or a cellular automaton, the behavior it exhibits will correspond to a computation of equivalent sophistication.

And while from the point of view of modern intellectual thinking this may come as quite a shock, it is perhaps not so surprising at the level of everyday experience. For there are certainly many systems in nature whose behavior is complex enough that we often describe it in human terms. And indeed in early human thinking it is very common to encounter the idea of animism: that systems with complex behavior in nature must be driven by the same kind of essential spirit as humans.

But for thousands of years this has been seen as naive and counter to progress in science. Yet now essentially this idea—viewed in computational terms through the discoveries in this book—emerges as crucial. For as I discussed earlier in this chapter, it is the computational equivalence of us as observers to the systems in nature that we observe that makes these systems seem to us so complex and unpredictable.

And while in the past it was often assumed that such complexity must somehow be special to systems in nature, what my discoveries and the Principle of Computational Equivalence now show is that in fact it is vastly more general. For what we have seen in this book is that even when their underlying rules are almost as simple as possible, abstract systems like cellular automata can achieve exactly the same level of computational sophistication as anything else.

It is perhaps a little humbling to discover that we as humans are in effect computationally no more capable than cellular automata with very simple rules. But the Principle of Computational Equivalence also implies that the same is ultimately true of our whole universe.

So while science has often made it seem that we as humans are somehow insignificant compared to the universe, the Principle of Computational Equivalence now shows that in a certain sense we are at the same level as it is. For the principle implies that what goes on inside us can ultimately achieve just the same level of computational sophistication as our whole universe.

But while science has in the past shown that in many ways there is nothing special about us as humans, the very success of science has tended to give us the idea that with our intelligence we are in some way above the universe. Yet now the Principle of Computational Equivalence implies that the computational sophistication of our intelligence should in a sense be shared by many parts of our universe—an idea that perhaps seems more familiar from religion than science.

Particularly with all the successes of science, there has been a great desire to capture the essence of the human condition in abstract scientific terms. And this has become all the more relevant as its replication with technology begins to seem realistic. But what the Principle of Computational Equivalence suggests is that abstract descriptions will never ultimately distinguish us from all sorts of other systems in nature and elsewhere. And what this means is that in a sense there can be no abstract basic science of the human condition—only something that involves all sorts of specific details of humans and their history.

So while we might have imagined that science would eventually show us how to rise above all our human details what we now see is that in fact these details are in effect the only important thing about us.

And indeed at some level it is the Principle of Computational Equivalence that allows these details to be significant. For this is what leads to the phenomenon of computational irreducibility.

And this in turn is in effect what allows history to be significant—and what implies that something irreducible can be achieved by the evolution of a system.

Looking at the progress of science over the course of history one might assume that it would only be a matter of time before everything would somehow be predicted by science. But the Principle of Computational Equivalence—and the phenomenon of computational irreducibility—now shows that this will never happen.

There will always be details that can be reduced further—and that will allow science to continue to show progress. But we now know that there are some fundamental boundaries to science and knowledge.

And indeed in the end the Principle of Computational Equivalence encapsulates both the ultimate power and the ultimate weakness of science. For it implies that all the wonders of our universe can in effect be captured by simple rules, yet it shows that there can be no way to know all the consequences of these rules, except in effect just to watch and see how they unfold."

********************************************************************